Get details on our recent survey on the security of AI-generated code.

What’s hype and what’s real when it comes to securing AI-generated code? We recently partnered with Gatepoint Research to get a reality check. We surveyed 117 security professionals about their concerns about, plans for, and thoughts on securing AI-generated code.

The main takeaways?

- AI assistants are, not surprisingly, playing a major role in most organizations’ software development processes.

- Vulnerabilities and lack of visibility are security professionals’ top concerns related to AI-led development.

- Securing AI-generated code is a priority and a budget line item for most.

- AI is emerging as a major disruptor to application security.

AI coding assistants have gone mainstream

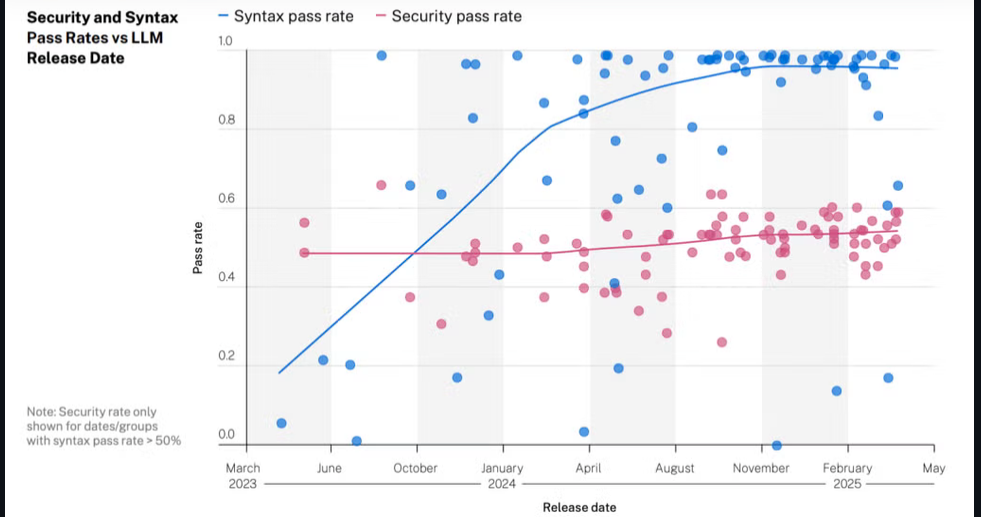

82% of respondents are experimenting with or using AI assistants in software development. What are the implications of this shift? It will certainly increase development velocity and, with it, the volume of code produced. The problem is that security teams’ ability to assess that code won’t keep up. Numerous studies also show that AI-generated code is optimized for functionality, not security. For example, the chart below from a recent Veracode report shows that the functionality of AI-generated code has improved over time while its security has not.

Source: Veracode

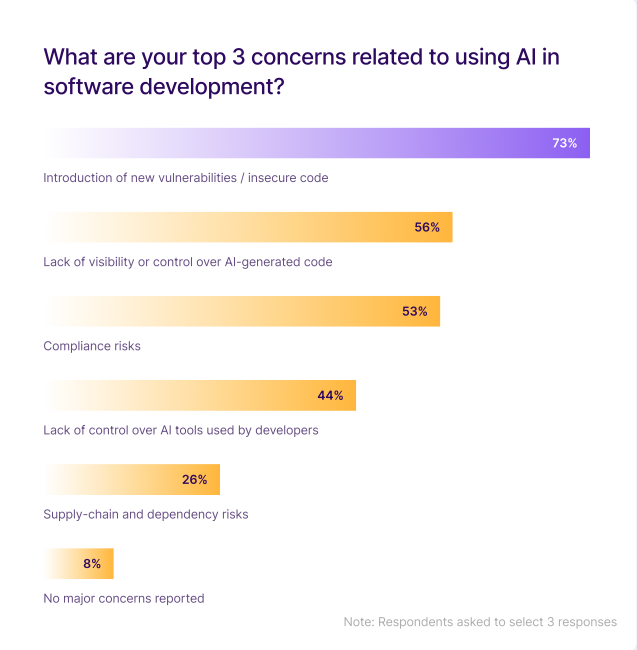

Vulnerabilities in and visibility into AI-generated code top security teams’ concerns

73% of respondents cite the introduction of new vulnerabilities as a top concern related to AI use in software development. 56% cite a lack of visibility into, or control over, AI-generated code as a top concern.

We hear the same concerns in our conversations with security teams, and they are valid. AI-generated code introduces novel vulnerabilities that traditional AppSec tools were not designed to identify or address. We’re already seeing early examples of model manipulation attacks, prompt injection into AI-driven apps, poisoned training data, and malicious model updates, and it’s only going to get worse.

The AI assistants themselves can introduce vulnerabilities as well. The Legit research team recently discovered significant vulnerabilities in GitLab’s coding assistant, GitLab Duo, and in GitHub Copilot. In GitLab Duo, the team uncovered a remote prompt injection vulnerability that would allow attackers to steal source code from private projects, manipulate code suggestions shown to other users, and even exfiltrate confidential, undisclosed zero-day vulnerabilities. The GitHub Copilot vulnerability allowed silent exfiltration of secrets and source code from private repos, and gave the research team full control over Copilot’s responses, including suggesting malicious code or links.

Visibility into where and how developers are using AI is a major concern for almost every security professional we talk to today. Security teams are struggling to get the visibility they need to assess the security of AI development tools and their output. That is why AI visibility is now a key part of AppSec: the ability to identify AI-generated code, and to see where and how AI is used across your software development environment, has become critical.

Securing AI-generated code is becoming a priority with budget behind it

24% of respondents say securing AI-generated code is their top priority over the next 12 to 24 months, and 49% call it a high priority over the same period. Three-quarters say they either have budget for securing AI-generated code in 2025-2026 or are planning to allocate it.

AI may seem like a buzzword, but securing AI-generated code is clearly a priority for security teams today, and most either have the budget to put a plan into action or plan to allocate it.

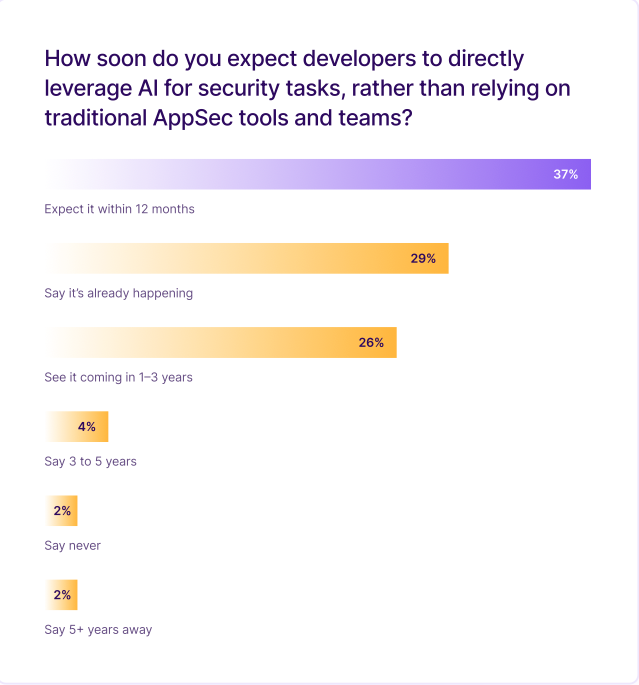

Most see AI transforming AppSec in the near term

Most application security tools emerged long before AI took the reins of software development. Will they be able to keep up? Can they adapt to this new reality, or will an entirely new set of tools be needed?

Most respondents expect the latter: purpose-built AI security tools integrated into AI IDEs will be the next evolution of AppSec. And almost two-thirds say that future is already here. 29% of respondents say their development teams are currently leveraging AI for security tasks rather than relying on traditional AppSec tools, and three-quarters of security professionals say they are experimenting with or actively using AI agents to extend their AppSec teams.

AI is transforming almost every aspect of life at a lightning-fast pace, and AppSec is clearly no exception.

Find out how we are helping enterprises like yours secure AI-generated code.

Get full survey results and details

See our new report, "Reality Check on Securing AI-Generated Code," for full survey results and analysis (no form!).
