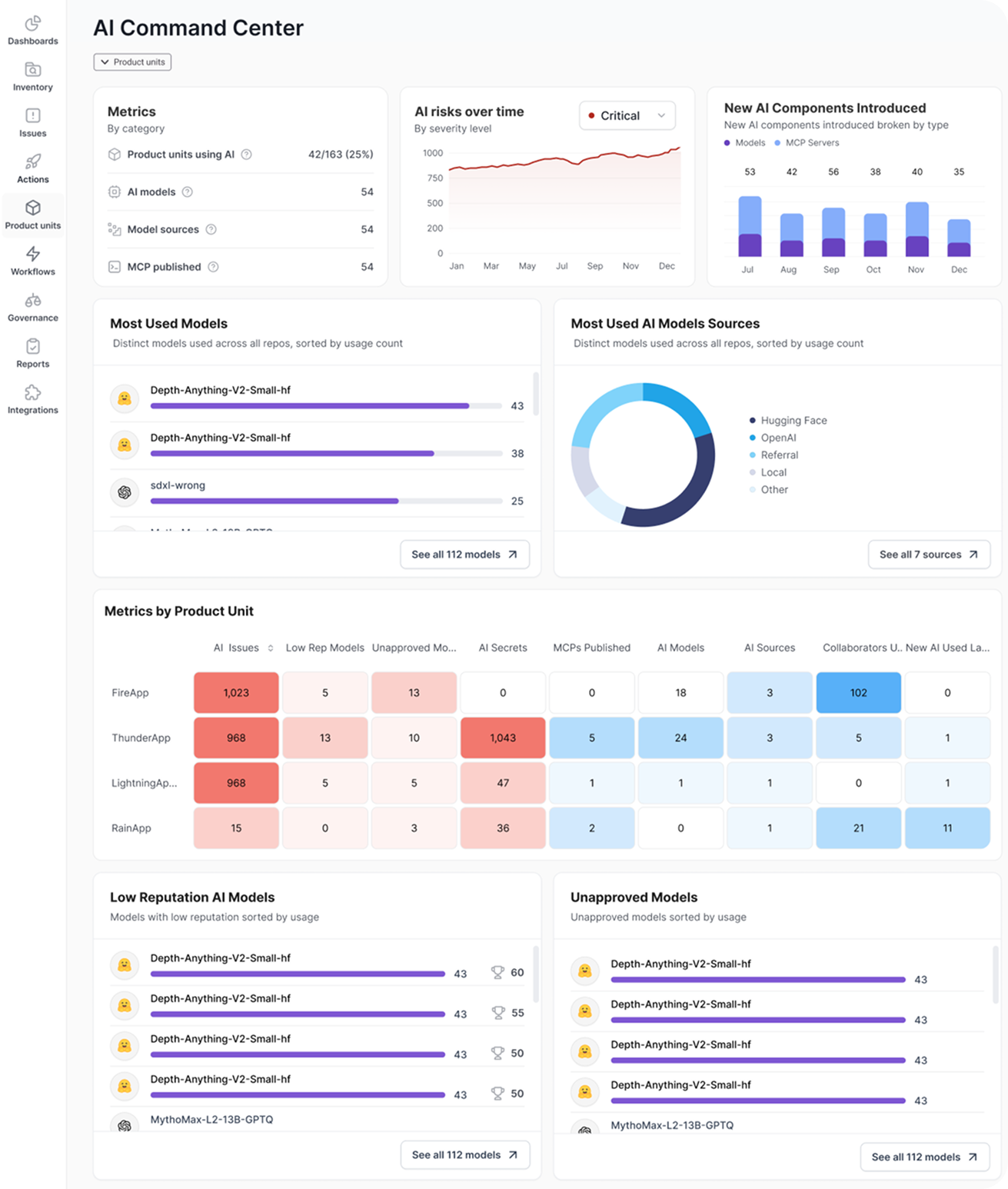

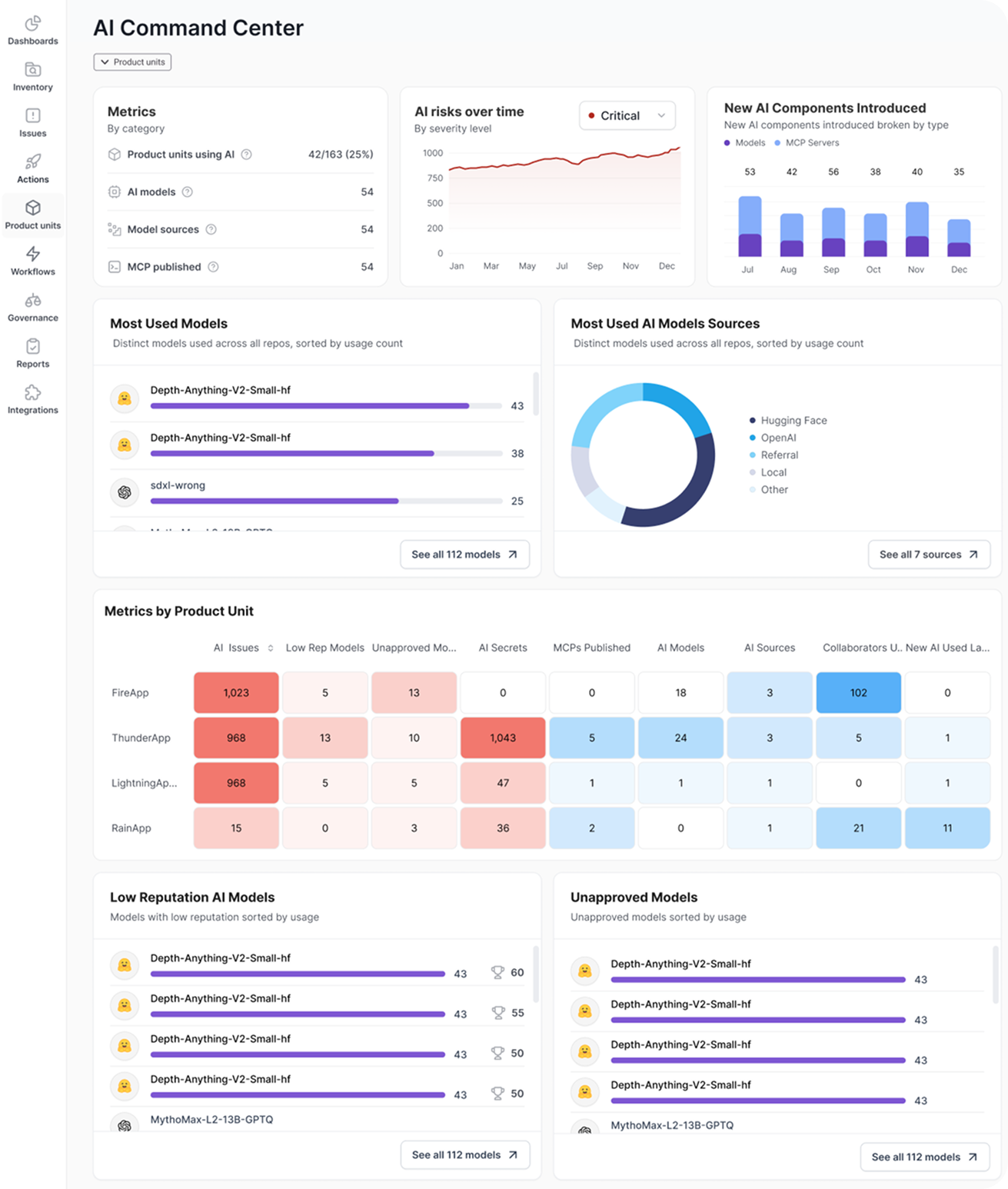

Key Features of Legit’s AI Security Command Center

Legit’s AI Security Command Center provides the most comprehensive view of when, where, and how engineering leverages AI in software development, along with the associated risks.

Legit is an AI-native ASPM (application security posture management) platform that automates AppSec issue discovery, prioritization, and remediation. It is a trusted vendor for AppSec and software supply chain security programs.

AI is revolutionizing development, making it faster, smarter, and more autonomous. It’s also rewriting the rules of application security. Traditional AppSec tools weren’t designed for AI-driven development processes. Legit is here to help.

Developers are adopting AI models, code assistants, and MCP (Model Context Protocol) servers at a record pace, often outside the view of security teams. In addition, security leaders lack key metrics for understanding the state of AI security within development. Legit’s AI Security Command Center shines a spotlight on AI, uncovering usage, risk, and metrics that matter to the business.

The massive benefits that AI code assistants, vibe coding platforms, MCP servers, and other AI tools bring to development can’t be overstated.

Engineers deliver much more, much faster.

But without the mechanisms to properly understand and govern AI usage across software development, the risks may quickly outweigh the benefits.

Unapproved and low-reputation models are often trained on insecure codebases and lack security guardrails.

Engineering’s use of AI tools may inadvertently expose secrets and other sensitive data.

Unauthorized MCP servers risk exposing sensitive data and enabling unauthorized AI agent actions.

Complex and varied AI risks, from policy violations to posture changes over time, are often overlooked.

Discover how to gain complete visibility and control over AI-generated code, models, and MCP servers across your SDLC.


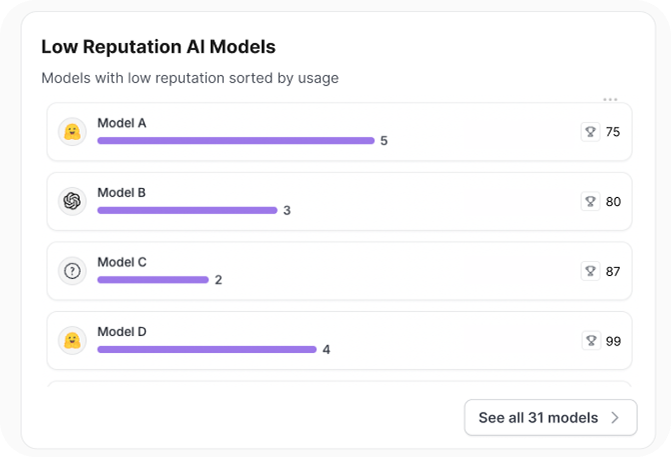

Users instantly see the AI models and MCP servers in their engineering environments, along with areas of risk that must be remediated. The platform also highlights newly introduced components, tracks the most frequently used models, and enriches this view with the context of each AI model’s reputation.

Low-reputation AI models, or those not approved by corporate policy, significantly increase the opportunity to introduce risk, especially if they were trained on insecure codebases or lack security guardrails. Legit’s AI Security Command Center delivers an immediate view of the models in use, even when an engineer attempts to bypass security processes and policies.

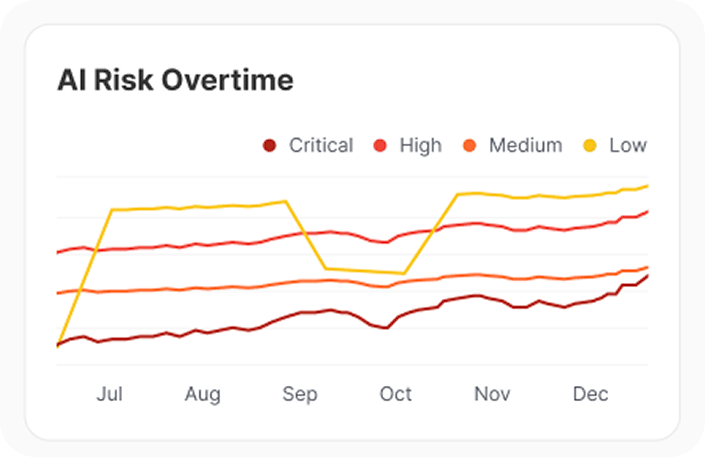

Beyond AI usage, Legit monitors AI-related risks in real time, including the riskiest AI secrets, the top AI risks by policy, and the change in AI risk over time. For security teams, this provides a clear mechanism to understand and communicate the impact of AI on the organization’s security posture.

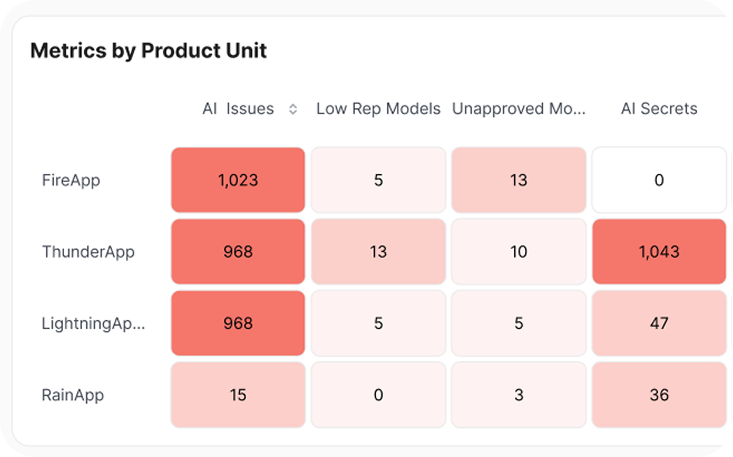

While AI usage is accelerating, developers’ expertise with these tools may be limited. Legit’s new AI heat map makes it easy to pinpoint the teams that introduce the most AI security issues and to compare AI security across application teams, so you can quickly identify where training or other support is needed most.

Demo Legit's AI Security Command Center

Watch a demo of the Legit AI Security Command Center.

Reality Check on Securing AI-Generated Code

We surveyed 117 security professionals to understand their pains, priorities, and plans surrounding AI-led software development.

Understand the new AppSec requirements when AI writes code.

Request a demo including the option to analyze your own software supply chain.
