Find out how Legit is giving organizations the visibility, control, and protection needed to safely adopt AI coding agents without sacrificing security or compliance.

AI Is Writing the Code, but Who’s Securing It?

AI coding agents such as GitHub Copilot have quickly become part of developers’ daily workflows, dramatically accelerating productivity and code delivery. But while AI has revolutionized how we write software, it has also introduced a new wave of security, governance, and visibility challenges that organizations are struggling to keep up with.

At Legit, we’ve seen firsthand what happens when AI-generated code enters production:

- More vulnerabilities. Research and customer data show that AI-generated code contains significantly more security flaws than human-written code. AI models optimize for functionality, not security. As developers shift from writing code to reviewing it, subtle vulnerabilities are more likely to slip through unnoticed.

- Risky AI ecosystems. Coding agents rely on models and Model Context Protocol (MCP) servers that can be vulnerable, exposed, or even malicious, creating new data-leak and supply chain risks. Securing this layer also means hardening IDE environments so that AI agents don’t have access to sensitive data, secrets, or configuration files that could be unintentionally exposed.

Introducing Legit VibeGuard

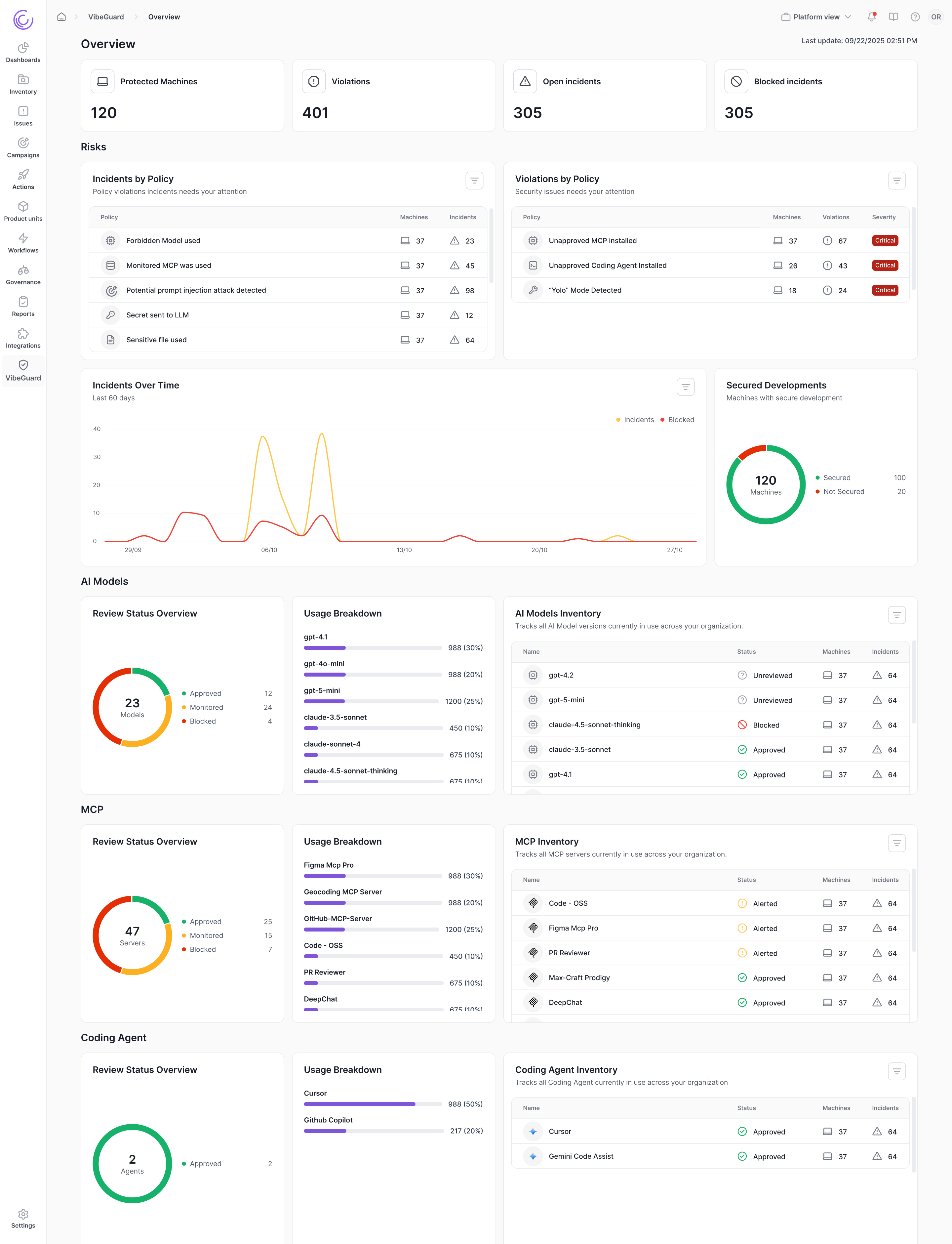

Legit VibeGuard is the first platform designed to secure and govern AI code generation directly in the IDE, at the very beginning of the software development process. It gives organizations the visibility, control, and protection needed to safely adopt AI coding agents without sacrificing security or compliance.

VibeGuard continuously monitors AI activity, prevents prompt injection attacks, and stops vulnerabilities before they ever reach production.

With VibeGuard, you can:

- Secure AI-generated code at the source. Automatically scan for vulnerabilities (SAST, SCA) before code ever leaves the IDE.

- Set AI security guardrails. Prevent code leaks, restrict access to sensitive files, and enforce safe AI configurations.

- Gain full visibility and control. Discover every AI assistant, model, and MCP in use across developer endpoints.

- Block unsafe or malicious AI tools. Detect and stop the use of vulnerable or compromised AI components.

- Prevent data leaks in real time. Identify and block secrets or sensitive data being exposed to AI tools as developers code.

How VibeGuard Works

VibeGuard is integrated directly into IDEs and AI coding agents such as GitHub Copilot and Cursor, and connects to a centralized management console. It continuously monitors prompts, models, MCPs, and code generation behaviors to detect and block risky activity in real time.

From a single dashboard, security and platform teams can:

Guide AI agents with security instruction files: From the VibeGuard platform, security teams can attach instruction files to code agents — acting like an additional built-in prompt that guides how AI generates code. These instructions apply secure-coding best practices for each tech stack and can be customized to match an organization’s specific policies and standards.

Gain visibility and governance over AI usage: VibeGuard provides a complete inventory of all AI tools, models, and MCPs in use across developer environments. Security teams can view what’s active, evaluate each component’s reputation score, and decide whether to approve, block, or flag it for review, ensuring only trusted and compliant technologies are used in development.

Apply policy-based security controls and guardrails: Organizations can define security policies that block the use of unapproved AI models or external MCPs based on company policy. VibeGuard also enforces configuration guardrails, for instance, alerting when a developer switches their coding assistant into an unsecured or “YOLO” mode. With these guardrails, teams can quickly identify and correct unsafe setups before they create risk.

Harden coding environments: VibeGuard lets organizations define and enforce what AI coding agents can access. For example, organizations can restrict AI coding agents from accessing files that commonly contain secrets or credentials, such as environment files (.env*), cloud credentials (aws/credentials, azure/credentials), or secret configuration paths (/config/secrets/*), preventing sensitive data from being exposed during code generation.
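As a rough illustration of the kind of path-based restriction described above, the following Python sketch checks a file path against the example patterns from the text. It is not VibeGuard's implementation or configuration format: the names `DENY_PATTERNS` and `is_denied`, and the matching rules (a bare pattern matches the file name, a pattern starting with `/` is anchored at the workspace root, any other pattern matches trailing path segments), are assumptions made for this example.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Example patterns copied from the text; the list name is hypothetical.
DENY_PATTERNS = [
    ".env*",
    "aws/credentials",
    "azure/credentials",
    "/config/secrets/*",
]


def is_denied(path: str, patterns=DENY_PATTERNS) -> bool:
    """Return True if a workspace-relative POSIX path matches a deny pattern.

    Assumed rules (not taken from any product documentation):
    - a pattern without "/" is matched against the file name only;
    - a pattern starting with "/" is anchored at the workspace root;
    - any other pattern is matched against trailing path segments.
    """
    parsed = PurePosixPath(path)
    relative = parsed.as_posix().lstrip("/")
    for pattern in patterns:
        if "/" not in pattern:
            if fnmatchcase(parsed.name, pattern):
                return True
        elif pattern.startswith("/"):
            if fnmatchcase("/" + relative, pattern):
                return True
        elif fnmatchcase(relative, pattern) or fnmatchcase(relative, "*/" + pattern):
            return True
    return False


if __name__ == "__main__":
    for candidate in [".env.local", "infra/aws/credentials",
                      "config/secrets/db.yaml", "src/app.py"]:
        verdict = "blocked" if is_denied(candidate) else "allowed"
        print(f"{candidate} -> {verdict}")
```

A deny list like this is only one possible design; an allow list of readable directories would be stricter, at the cost of more configuration.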

The result is a continuous layer of proactive protection that ensures security keeps pace with the rapid delivery of software.
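To make the approve, block, or flag workflow and the configuration guardrails described above more concrete, here is a minimal Python sketch of how such a policy check could be modeled. Everything in it is hypothetical: the `Component` and `Policy` schemas, the 0-100 reputation scale, the `min_reputation` threshold, and the `yolo_mode` settings key are illustrative assumptions, not VibeGuard's data model or API.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    BLOCK = "block"
    FLAG = "flag"  # hold for manual review


@dataclass(frozen=True)
class Component:
    """One inventoried AI tool, model, or MCP server (hypothetical schema)."""
    name: str
    kind: str        # e.g. "assistant", "model", or "mcp"
    reputation: int  # assumed 0-100 score; higher means more trusted


@dataclass(frozen=True)
class Policy:
    """Hypothetical organization policy."""
    approved: frozenset[str]  # component names explicitly allowed
    min_reputation: int = 50  # assumed threshold below which we block


def evaluate(component: Component, policy: Policy) -> Decision:
    """Approve listed components, block low-reputation ones, flag the rest."""
    if component.name in policy.approved:
        return Decision.APPROVE
    if component.reputation < policy.min_reputation:
        return Decision.BLOCK
    return Decision.FLAG


def config_alerts(agent_settings: dict[str, bool]) -> list[str]:
    """Return alerts for unsafe assistant settings (the key name is assumed)."""
    alerts = []
    if agent_settings.get("yolo_mode", False):
        alerts.append("coding assistant is running in unsecured 'YOLO' mode")
    return alerts


if __name__ == "__main__":
    policy = Policy(approved=frozenset({"internal-model"}))
    inventory = [
        Component("internal-model", "model", 90),
        Component("unknown-mcp", "mcp", 20),
        Component("new-assistant", "assistant", 75),
    ]
    for item in inventory:
        print(item.name, "->", evaluate(item, policy).value)
    print(config_alerts({"yolo_mode": True}))
```

A stricter policy could block every component that is not explicitly approved instead of flagging it for review; the sketch flags unknown but reputable components so that a security team makes the final call.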

VibeGuard Redefines AI-Native Security in Three Key Ways

- Secures AI-generated code at creation. Moves AppSec from after-the-fact testing to proactive protection built directly into AI development workflows. Legit trains AI agents using policy-based controls, secure-coding rules, and guardrails that ensure generated code meets security standards before it leaves the IDE.

- Protects and governs AI coding agents. Monitors and secures agents’ use of models, MCPs, and sensitive data, while blocking prompt-injection attacks and enforcing compliance across the entire fleet of coding assistants.

- Brings visibility and control to AI-driven development. Provides security teams with complete transparency into every AI model, extension, and agent running inside developer IDEs, delivering the same level of control they expect from traditional AppSec, but built for the AI era.

Secure the Future of AI-Driven Development

The rise of AI coding agents is revolutionizing how we build software — but innovation must go hand in hand with security.

Legit VibeGuard ensures that AI-driven development remains fast, intelligent, and safe.

👉 Learn more and see VibeGuard in action at https://www.legitsecurity.com/vibeguard-resource-hub.
