Get details on 4 new AppSec requirements in the AI-led software development era.

We all know AI is transforming both software development and software security. But amid all the hype, fear, and information overload, what are the top 4 AppSec steps you should focus on today?

Our experts recommend the following:

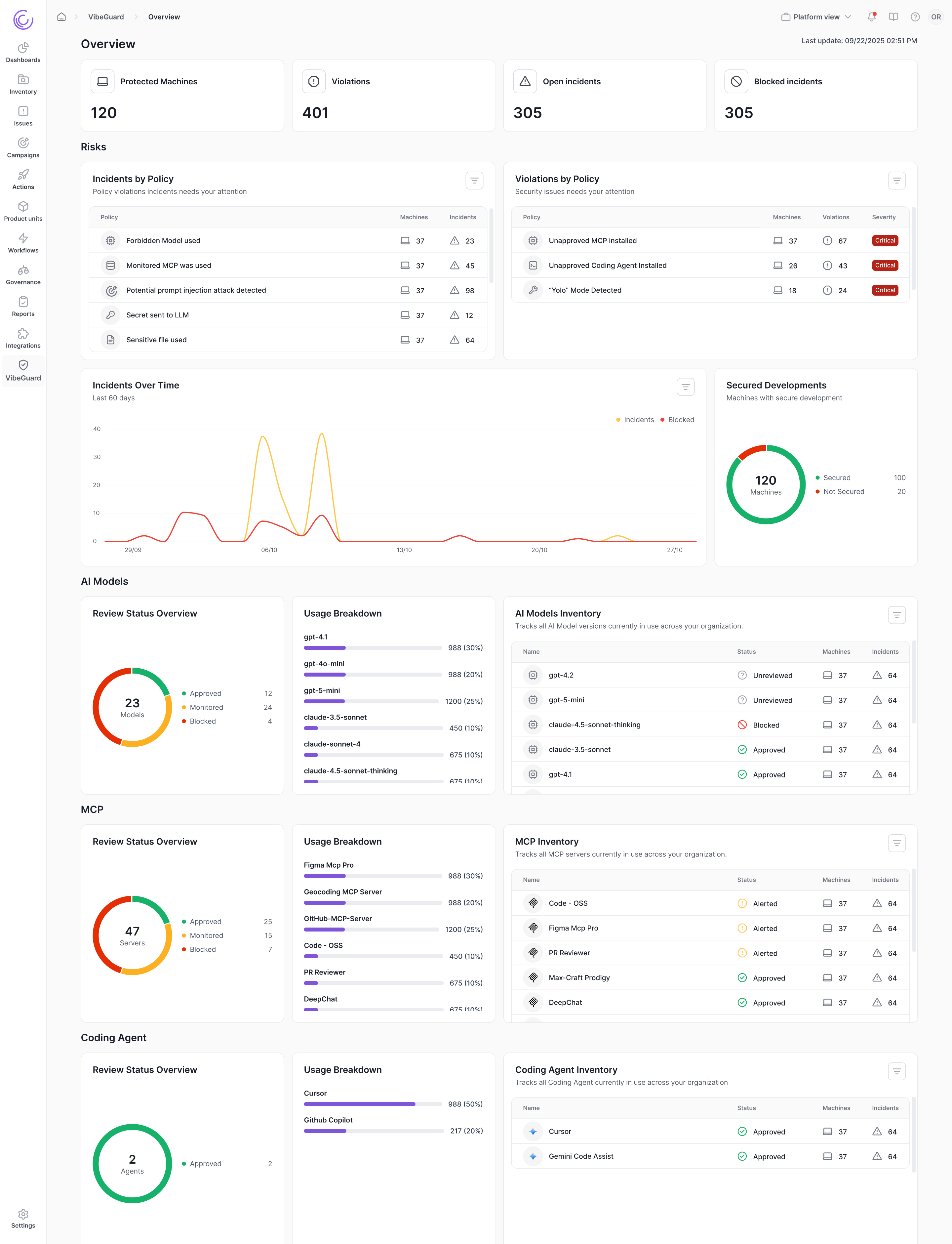

AI discovery

AI visibility is now a key part of AppSec. It has become critical to identify AI-generated code, as well as where and how AI is in use in your software development environment.

You want to both discover AI in your environment and create governance around how it’s used.

What exactly do you need to discover? Ultimately, all AI elements in your development environment: every model your developers are creating, every Model Context Protocol (MCP) server they are using, and other components like AI services.

In addition, which AI code-generation tools are in use? Cursor? Copilot? You’ll need to apply governance around these tools as well.
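As a rough illustration of what discovery can look like at the repository level, here is a minimal sketch that walks a checked-out repo and reports signs of AI tooling, MCP configuration files, and model artifacts. The function name `discover_ai_elements` is hypothetical, and the marker paths and file extensions are illustrative assumptions, not an exhaustive or authoritative list. Real discovery also has to cover IDE plugins, CI pipelines, and cloud AI services, none of which a file scan can see.

```python
from pathlib import Path

# Illustrative markers only (assumptions for this sketch, not a complete list).
TOOL_MARKERS = {
    ".cursorrules": "Cursor",
    ".cursor": "Cursor",
    ".github/copilot-instructions.md": "GitHub Copilot",
}
MCP_CONFIG_NAMES = {"mcp.json", ".mcp.json"}
MODEL_SUFFIXES = {".onnx", ".gguf", ".safetensors", ".pt"}


def discover_ai_elements(repo_root):
    """Return AI tools, MCP configs, and model files found under repo_root."""
    root = Path(repo_root)
    tools = set()
    mcp_configs = []
    model_files = []

    # Tool markers are checked at fixed locations relative to the repo root.
    for marker, tool in TOOL_MARKERS.items():
        if (root / marker).exists():
            tools.add(tool)

    # MCP configs and model artifacts can live anywhere in the tree.
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        rel = path.relative_to(root).as_posix()
        if path.name in MCP_CONFIG_NAMES:
            mcp_configs.append(rel)
        elif path.suffix.lower() in MODEL_SUFFIXES:
            model_files.append(rel)

    return {
        "tools": sorted(tools),
        "mcp_configs": sorted(mcp_configs),
        "model_files": sorted(model_files),
    }
```

Running this across every repository gives a first inventory to attach governance policies to, for example which tools are approved and which MCP servers need review.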

AI-specific security testing

AI-specific security testing has become vital as well. AI introduces novel vulnerabilities and weaknesses that traditional scanners can’t find, such as training data poisoning, excessive agency, and others detailed in OWASP’s LLM & Gen AI Top 10.

You also now need the ability to identify low-reputation or malicious AI models in use.

Threat modeling

As the risk to the organization is changing, so too must threat models. If your app now exposes AI interfaces, is running an agent, or gets input from users and uses the model to process it, you’ve got new risks.

Legit’s Advanced Code Change Management plays a role here. It can detect when a team is introducing a new AI component to their app, then alert the right people to threat model the app before it’s too late. You don’t want to discover a chatbot without the proper guardrails after it’s been deployed for months.

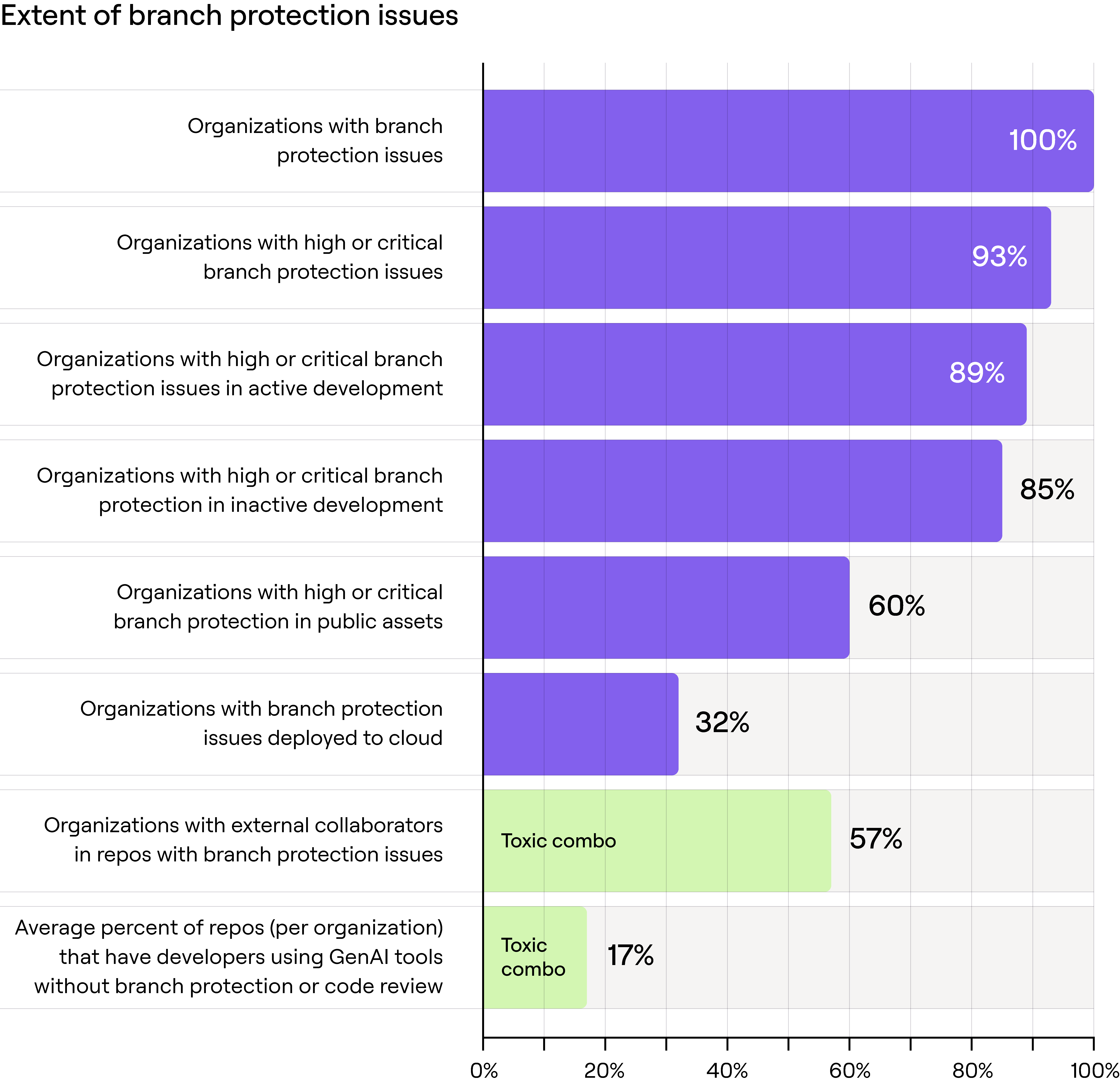
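To make the idea of change-triggered threat modeling concrete, here is a minimal sketch that compares a Python `requirements.txt` before and after a code change and reports newly added AI SDKs. This is not Legit’s implementation. The `AI_PACKAGES` watch list and the function names are assumptions chosen for illustration, and a real detector would also need to cover other ecosystems, direct HTTP calls to AI services, and model files.

```python
import re

# Hypothetical watch list of AI-related packages (an assumption for this sketch).
AI_PACKAGES = {"openai", "anthropic", "langchain", "transformers"}


def parse_requirements(text):
    """Extract lower-cased package names from requirements.txt-style text."""
    names = set()
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        # Keep only the name, cutting at version specifiers, extras, or markers.
        name = re.split(r"[<>=!~\[; ]", line, maxsplit=1)[0].strip().lower()
        if name:
            names.add(name)
    return names


def new_ai_components(before_text, after_text):
    """Return AI packages present after the change but not before it."""
    added = parse_requirements(after_text) - parse_requirements(before_text)
    return sorted(added & AI_PACKAGES)


if __name__ == "__main__":
    before = "flask==3.0\nrequests\n"
    after = "flask==3.0\nrequests\nopenai>=1.0  # chat feature\n"
    for package in new_ai_components(before, after):
        print(f"New AI component '{package}' added: request a threat model review")
```

A check like this could run on each pull request, so that the alert reaches the right people before the change is merged rather than months after deployment.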

Awareness of toxic combinations

The use of AI in code development itself is not necessarily a risk. But when its use is combined with another risk, like lack of static analysis or branch protection, the risk level rises.

For instance, research for our 2025 State of Application Risk report revealed that, on average, 17% of repos per organization combine developer use of GenAI tools with a lack of branch protection or code review.

Addressing these “toxic combinations” requires both discovering which development pipelines are using GenAI to create code and ensuring those pipelines have all the appropriate security measures and guardrails in place.
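The logic behind a toxic combination can be sketched in a few lines. The example below assumes you already have per-repository posture data. The `RepoPosture` fields and function names are hypothetical, and the sample repositories are made up for illustration rather than taken from the report.

```python
from dataclasses import dataclass


@dataclass
class RepoPosture:
    """Hypothetical per-repository posture record (all fields are assumptions)."""
    name: str
    uses_genai: bool
    branch_protection: bool
    code_review_required: bool


def is_toxic_combination(repo):
    """GenAI use alone is not flagged; GenAI plus a missing guardrail is."""
    missing_guardrail = not (repo.branch_protection and repo.code_review_required)
    return repo.uses_genai and missing_guardrail


def toxic_share(repos):
    """Percentage of repos with a toxic combination (0.0 for an empty list)."""
    if not repos:
        return 0.0
    toxic = sum(1 for repo in repos if is_toxic_combination(repo))
    return 100.0 * toxic / len(repos)


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    repos = [
        RepoPosture("payments", True, True, True),
        RepoPosture("web-app", True, False, True),
        RepoPosture("docs", False, False, False),
        RepoPosture("cli", False, True, True),
    ]
    flagged = [repo.name for repo in repos if is_toxic_combination(repo)]
    print(flagged, f"{toxic_share(repos):.0f}%")
```

Note that `docs` is not flagged even though it has no guardrails: the point of the combination is that risk is scored on factors occurring together, not on any single factor.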

Learn more

Get more details on AppSec in the Age of AI in our new whitepaper.